HMI – Human Machine Interface, the link between machines and their human users. In Part Four of this series (you can check out Part One, Part Two, and Part Three here), I’m trying to look into the future of HMI. I say “trying” because predicting the future is notoriously difficult and ends up, pretty much, as a guessing game. Never mind all the complex theory behind the psychological factors that limit our view of the future, or the “Marketing” vision of the future, which is biased towards what we have now and how to sell more of it. The big problem is this – our present and future are powered by technology, and technology always progresses quicker than we imagine and is always accepted slower than we hope. So, having set myself up for failure, let’s speculate a little.

As the Chinese proverb says, “A journey of a thousand miles starts with a single step”. We need to know where we start from to have any idea of where we might go, and it could be a long journey. Where we are right now is a “manual” (relating to or done with the hands) method. By this I mean that, although with current HMI we don’t manually interact with a process, we do manually interact with the HMI that interacts with the process. We touch buttons on a control panel, screen, keyboard, or mouse. There might be some automation or Voice Recognition control, but this all needs humans to have some physical interaction. After all, it’s a “Human” Machine Interface.

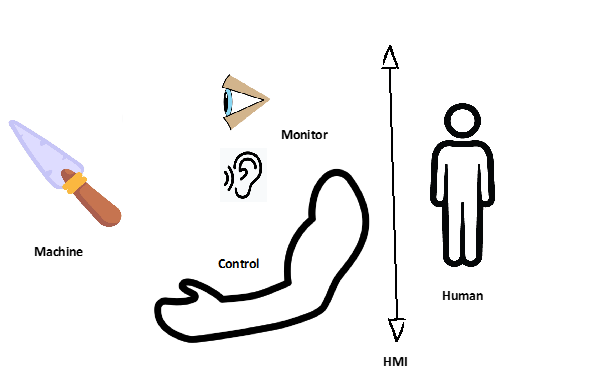

Looking back over the previous parts of the HMI blogs, we have moved from direct manual interaction with tools…

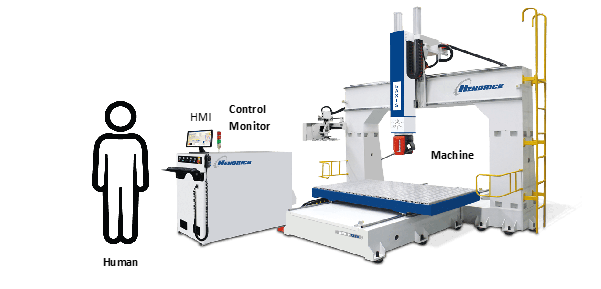

To interacting with machines that use tools…

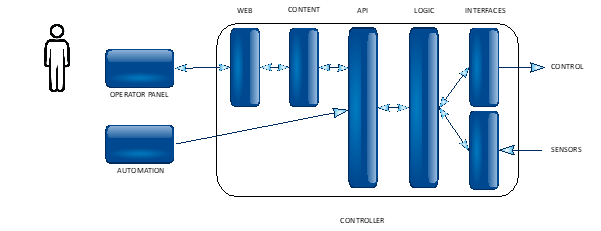

To splitting down the control element and injecting Remote Control, Monitoring and Automation…

And improving the visual design and interaction that the HMI provides…

I’m not suggesting an immediate move to what is termed “Brain-Computer Interfaces” (BCI), because, although that might be technologically possible in the longer term, it runs up against the idea that “technology is always accepted slower than we hope”. Maybe someday, but not soon. I’m also not suggesting the “Father Christmas Machine”, where machines make machines that make machines, etc. After all, where’s the human in that?

As with everything in IT, IoT, HMI, etc., there is much research into what is needed in the future. It focuses mainly on improved functionality, reducing costs, improving efficiency, reducing environmental impact, and, as with everything else now, Artificial Intelligence (AI). Much of it starts with statements about the billions of dollars that the HMI Industry is worth.

The short term.

Most of this type of research just focuses on what I have talked about in the previous parts of this Blog series. So, I won’t go over that again!

The medium term.

There will be a “more of the same” set of improvements to what we already have, fine-tuning the current systems to just work better. On-Machine Applications will take advantage of the under-the-covers Application Programming Interfaces (APIs) to assist the Human Operator in making better, more efficient, and more informed decisions about controlling processes. Wearable devices will be aware of the presence of the Human Operator and the hand gestures made, so that physically touching the control panel is no longer required. Other wearables will provide Augmented Reality (AR): goggles that overlay information on the Human Operator’s view of what is going on. Natural Language Processing (NLP) will let the system be told what to do by interpreting what the Human Operator says, rather than just responding to actions or simple commands. Together, these remove the need for a Control Panel as the Interface and set a foundation for greater collaboration with an Operator Team.

The long term.

Here is where it all goes a bit more in the direction of Science Fiction, with topics such as Artificial Intelligence (AI) and the Brain-Computer Interface (BCI).

AI – A very popular subject now, but I would argue that what we have now is little more than souped-up Natural Language Processing (NLP). AI can act on what we say/type, but it does not understand what we mean. Luckily, AI does not have to go the Skynet path and become self-aware to understand what we mean. Today it can handle “move the chair to the centre of the room” but has trouble with “move the chair to a better position”. After all, what does “better” mean? Easy for a human, much more difficult for a machine. When problems like this are fully solved, AI can become a fully working partner in operating these systems.

BCI – This would remove the need for all the Wearables, AR, NLP, gesture recognition, etc. by “reading our minds” to know what we want the machine to do. There are two types of BCI: invasive (a wire in the Brain) and non-invasive (sensing Brain Waves). Obviously, non-invasive BCI would be easier for us to accept. This is, of course, way off in the future.

I would love to hitch a ride in Dr. Brown’s DeLorean, fire up the Flux Capacitor, and go see how this works out.